Back Up the Whole Filesystem

The simplest solution is to dump the whole filesystem into one big tar file. This approach has a few drawbacks:

- Creating the backup is slow and consumes a large amount of server resources (CPU and disk I/O)

- Transferring the backup off-site consumes your bandwidth (which you pay for), so you cannot do it very often

- Restoring the backup is slow (you first have to transfer the whole dump back)

How can we make frequent backups without these problems? Here is the answer:

Incremental Backup

What is an incremental backup? It is a method of creating an archive that contains only the files changed since the last backup. During typical operating system usage only a small percentage of files change (database files, logs, cache, etc.), so we can back up only those files.
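To make "only files changed since the last backup" concrete, here is a minimal hand-rolled sketch using `find -newer` and a timestamp file. It is an illustration of the idea only: the script below relies on GNU tar's own snapshot files (`-g`) instead. The function name `changed_since_last` and the stamp file are hypothetical.

```sh
#!/bin/sh
# Illustration only: list regular files under DIR changed since the last run.
# STAMP is a hypothetical marker file whose mtime records the previous run.
changed_since_last() {
    dir=$1
    stamp=$2
    # Mark the start of this run before scanning, so a file modified while
    # find is running is reported again next time instead of being missed.
    touch "$stamp.new"
    if [ -e "$stamp" ]; then
        find "$dir" -type f -newer "$stamp"
    else
        # No previous run: every file counts as changed (a full backup).
        find "$dir" -type f
    fi
    mv "$stamp.new" "$stamp"
}
```

For example, `changed_since_last /etc /var/tmp/etc.stamp` prints every file on the first call and only modified files afterwards. Note that this approach cannot notice deleted files; GNU tar's snapshot files also record directory contents, which is one reason to prefer them.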

A second optimisation is to back up only files that can't be reproduced from the operating system repositories. For example, most binary files in /usr/bin come from operating system packages, and we can easily recreate them using the standard installation methods. A third optimisation is to skip files that our application regenerates automatically (caches) or that don't play a critical role in our system (some debug logs lying around). Let's see some code that can do such a task:

/home/root/bin/backup-daily.sh:

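```bash
#!/bin/bash
# Daily incremental backup using GNU tar snapshot files (-g).
# DIR is not defined in the original text: /srv/backup is an assumed
# location. Keep it outside the trees being archived.
DIR=/srv/backup

# backup NAME PATH...: archive PATH... (relative to /) as NAME-<time>-*.tgz
backup() {
    local tgz_base_name=$1
    shift

    # Snapshot file in which GNU tar records what has already been archived
    local _lst_file=$DIR/$tgz_base_name.lst
    local _time _tgz_path
    _time=$(date +%Y%m%d%H%M)

    # No snapshot yet: this run is a full (base) archive; later runs are incremental
    if test -f "$_lst_file"; then
        _tgz_path=$DIR/$tgz_base_name-$_time-incr.tgz
    else
        _tgz_path=$DIR/$tgz_base_name-$_time-base.tgz
    fi

    # -C / makes "etc", "home" and "var" mean /etc, /home and /var even when
    # cron starts the script in another directory; "$@" keeps paths intact
    tar -czf "$_tgz_path" -g "$_lst_file" -C / "$@"
}

mkdir -p "$DIR"
backup etc etc
backup home home
backup var var
```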
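The script above covers the second optimisation simply by choosing which trees to archive (etc, home and var, but not usr). The third optimisation, plus a record of package-managed software, could be sketched as follows. This assumes GNU tar on a Debian-like system; the exclude patterns (`*/cache`, `*.debug.log`) and the function names are hypothetical examples to adapt to where your applications keep caches and logs.

```sh
#!/bin/sh
# Sketch: archive directories while skipping regenerable or unimportant data.
# Assumptions: GNU tar; both exclude patterns are hypothetical examples.
backup_filtered() {
    archive=$1
    shift
    # Exclusion options must come before the directories they apply to.
    tar -czf "$archive" \
        --exclude='*/cache' \
        --exclude='*.debug.log' \
        "$@"
}

# Files installed from packages (most of /usr/bin, for example) need no
# backup; on Debian-like systems save the package selection list instead.
save_package_list() {
    if command -v dpkg >/dev/null 2>&1; then
        dpkg --get-selections > "$1"
    fi
}
```

On a fresh system the saved list can typically be replayed with `dpkg --set-selections < packages.list` followed by `apt-get dselect-upgrade`, which reinstalls the packages instead of restoring their files from the archive.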

Automated Backup

If you do your backups manually, I'm sure you will forget about them. It's a boring task and IMHO it should be delegated to a computer, not to a human. The UNIX world has a very helpful daemon called cron. It is responsible for periodically executing programs (typical maintenance tasks in your system, such as rotating logs). Here is the crontab entry for the backup task:

`31 20 * * * nice ~/bin/backup-daily.sh`

The backup will be created every day at 20:31; `nice` lowers the job's CPU priority so it competes less with the services running on the server. Remember to make the script executable (`chmod +x ~/bin/backup-daily.sh`).

The next step is to copy the backups off the server. That's easy: set up a similar cron task on a separate machine that pulls your backups off-site with rsync (use SSH keys for authorisation).
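A minimal sketch of the off-site side might look like this. It assumes the server keeps its archives in `/srv/backup` and that a passphrase-less key at `~/.ssh/backup_key` is authorised on it; the host name, paths, key name and the script name `pull-backups.sh` are all hypothetical.

```sh
#!/bin/sh
# pull-backups.sh: run from cron on the off-site machine to pull the archives
# over SSH. The key path below is a hypothetical example.
KEY="$HOME/.ssh/backup_key"   # passphrase-less key authorised on the server

pull_backups() {
    src=$1    # e.g. root@backup-server.example.com:/srv/backup/
    dest=$2   # local directory that keeps the off-site copies
    mkdir -p "$dest" || return 1
    # -a preserves permissions and timestamps; files already present with the
    # same size and mtime are skipped, so only new archives cross the network.
    rsync -a -e "ssh -i $KEY" "$src" "$dest"
}

# Run only when called with SRC and DEST, so the function can also be sourced.
if [ $# -eq 2 ]; then
    pull_backups "$1" "$2"
fi
```

A matching crontab entry on the off-site machine could be `45 23 * * * /usr/local/bin/pull-backups.sh root@backup-server.example.com:/srv/backup/ /srv/offsite-backup/`, scheduled after the server's 20:31 job has had time to finish. Pulling from the off-site machine (rather than pushing from the server) means a compromised server holds no credentials for the backup copies.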

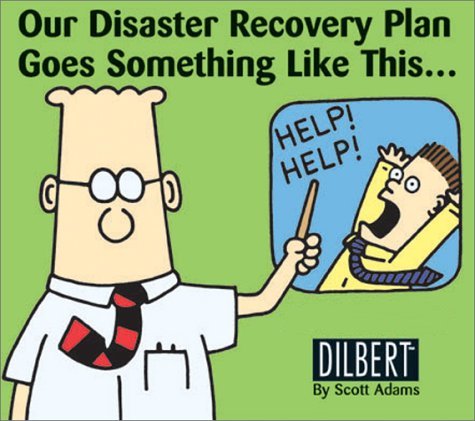

Test If Your Backup Is Working Properly

So you've just created an automatic backup procedure and want to rest. DON'T!!! You must check that your backup is sufficient to restore all the services you're responsible for after a disaster. The best way to do that is to try restoring your systems on a clean machine. Again, we have a few options here:

- set up dedicated hardware and install a clean system on it

- set up a virtual server using virtualisation technology

- use chroot to quickly set up a working system

The options are ordered from "heaviest" to "easiest". Which one to choose? Personally, I prefer chroot. You can easily (well, at least under Debian) set up a "fresh" system and test the restore procedure.
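A restore test replays one backup chain (the newest base archive plus the incremental archives made after it) into a scratch root. The sketch below assumes GNU tar and the archive naming used by backup-daily.sh (`NAME-YYYYmmddHHMM-base.tgz` / `NAME-YYYYmmddHHMM-incr.tgz`); `restore_chain` is a hypothetical helper name. Archives are extracted with `-g /dev/null`, which tells GNU tar to treat them as incremental dumps, so files deleted between backups are deleted on restore as well.

```sh
#!/bin/sh
# restore_chain NAME SRC TARGET: replay NAME's newest backup chain from SRC
# into TARGET (e.g. a freshly created chroot). Assumes GNU tar.
restore_chain() {
    name=$1; src=$2; target=$3
    base=""
    # Globs expand in sorted order and the timestamps sort chronologically,
    # so the last existing match is the newest base archive.
    for f in "$src/$name"-*-base.tgz; do
        [ -e "$f" ] && base=$f
    done
    [ -n "$base" ] || { echo "no base archive for $name" >&2; return 1; }
    base_time=${base%-base.tgz}
    base_time=${base_time##*-}
    mkdir -p "$target" || return 1
    tar -xzf "$base" -g /dev/null -C "$target" || return 1
    for f in "$src/$name"-*-incr.tgz; do
        [ -e "$f" ] || continue
        t=${f%-incr.tgz}
        t=${t##*-}
        # Skip increments that belong to an older chain.
        [ "$t" -gt "$base_time" ] || continue
        tar -xzf "$f" -g /dev/null -C "$target" || return 1
    done
}
```

Under Debian, the fresh system itself is usually created with `debootstrap`, for example `debootstrap stable /srv/restore-test http://deb.debian.org/debian` (target path hypothetical). After `restore_chain etc /srv/backup /srv/restore-test` and the same for home and var, `chroot /srv/restore-test /bin/bash` lets you check whether your services actually start from the restored data.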